Rishi Malhotra

Computer Science @ Cornell University

Interested in Machine Learning, Data Science and Software Engineering opportunities

Featured Internship Project: Real Time Crowd Counting

Projects and Experience

Covid-19 Hospitalizations

Applied vanilla neural networks and AdaBoost with decision trees to predict future Covid-19 hospitalization rates, achieving a mean squared error of 91,809 and ranking 8th on the Kaggle competition leaderboard.

May 2021

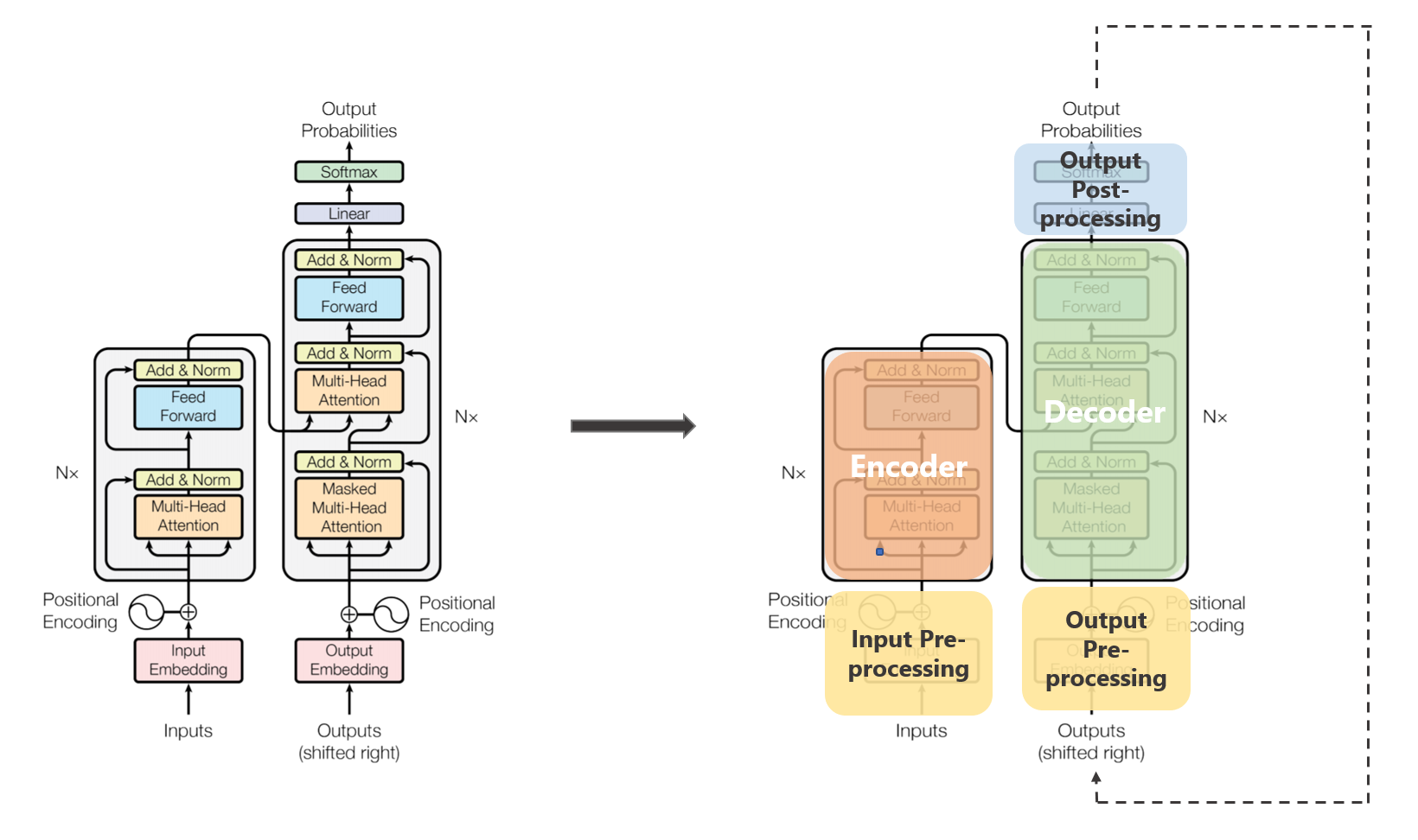

Closed-Domain Question Answering

Interpreted state-of-the-art NLP papers “Attention Is All You Need” and “Reading Wikipedia to Answer Open-Domain Questions” (DrQA) to develop a transformer-based solution with a 46.04 Exact Match score and a DrQA-inspired solution with a 45.01 Exact Match score on the SQuAD 2.0 challenge.

January 2021

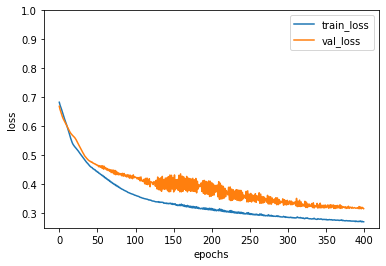

Real Time Crowd Counting

Computer vision and object detection algorithms, plus multithreaded software, for real-time crowd counting in low- and high-density crowds. Created for my internship at Plugout.

August 2020

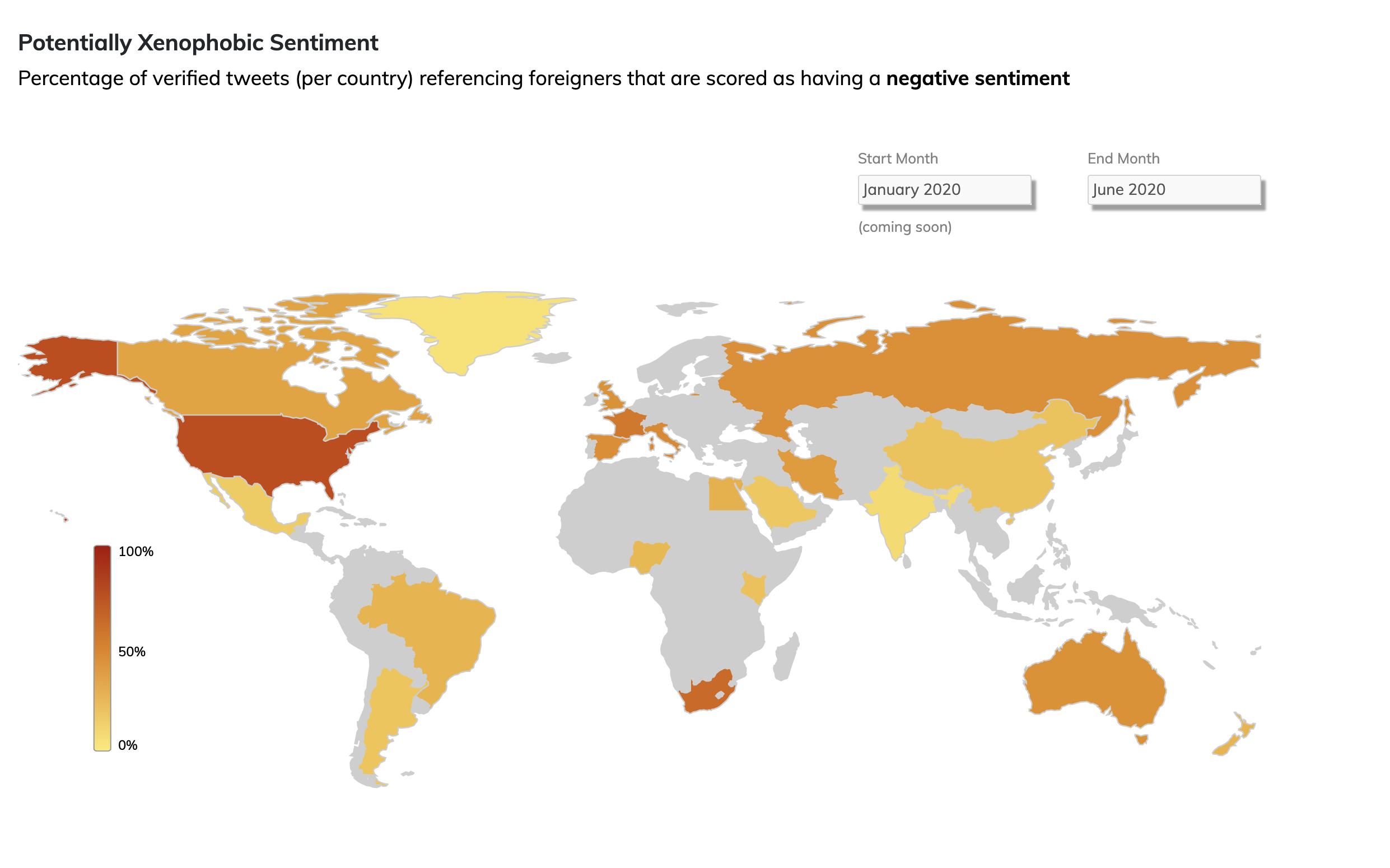

Xenophobic Meter Project

A natural language processing application that identifies xenophobic tweets with 96% accuracy.

December 2019

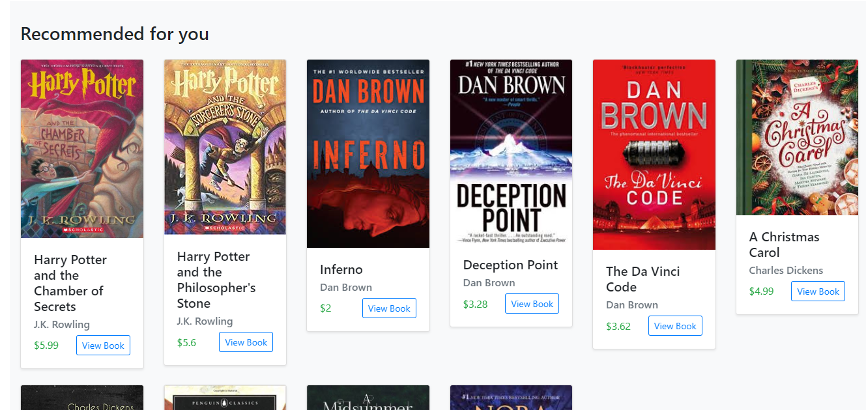

Book Schmo

A book e-commerce web application with buyer and seller functionalities. Includes an integrated front-end and back-end.

March 2019

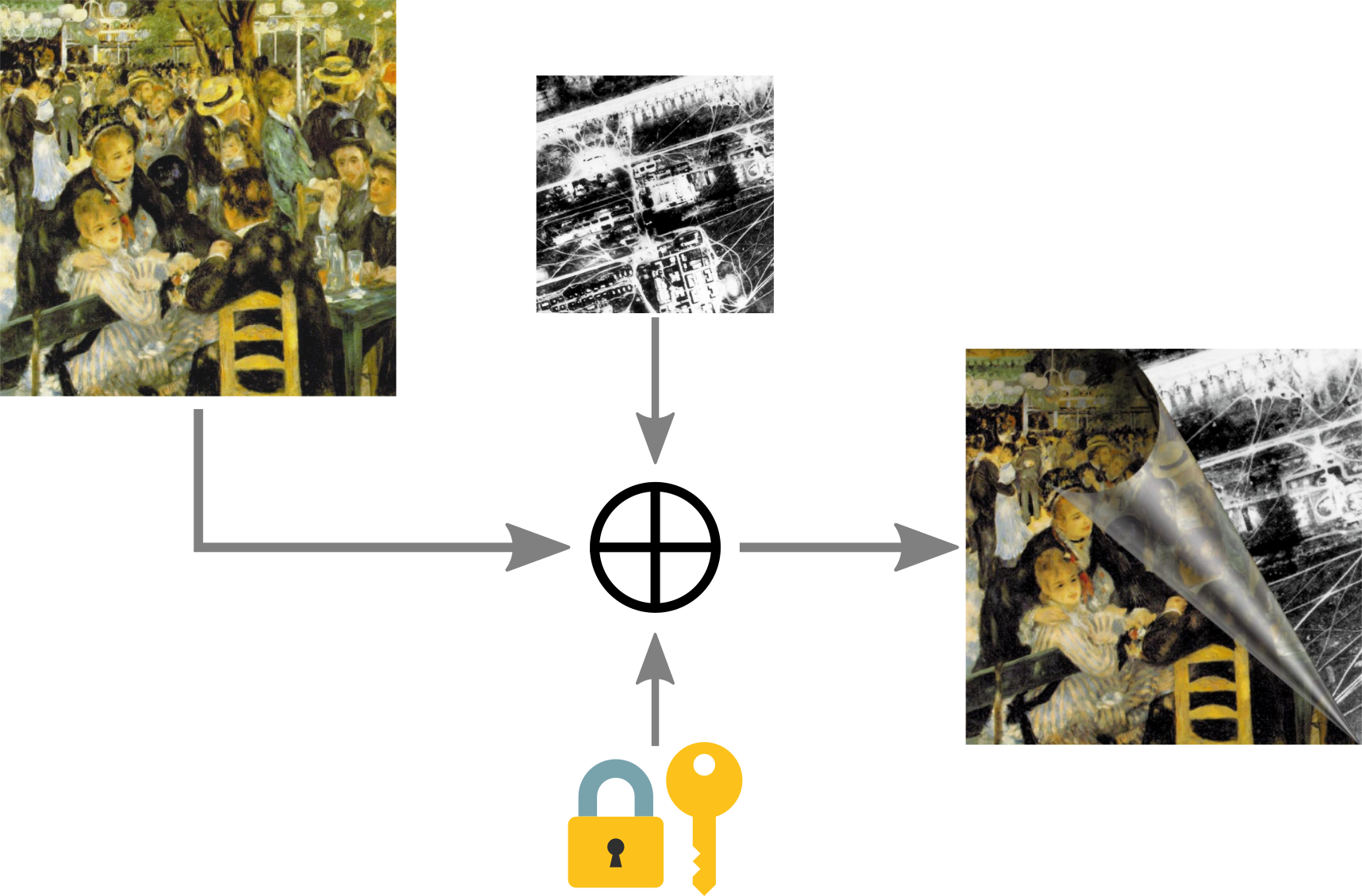

Image Steganalysis Machine Learning Classifier

A deep learning classifier that detects hidden data within digital images, built for a Kaggle competition.

May 2020

CAD Design Automation - Cornell Hackathon

Scripts that automate CAD design. Finalist at the CU Make-a-thon 2020.

February 2020

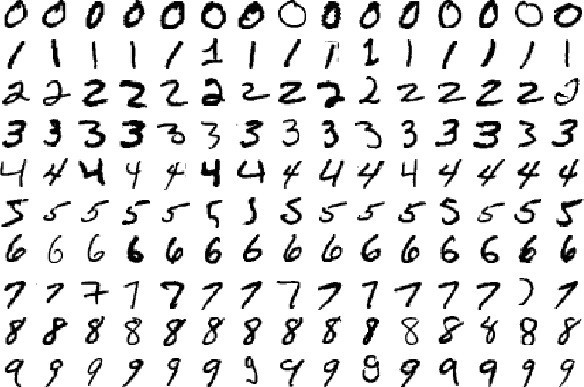

Photo OCR Application

A machine learning application that classifies handwritten characters.

June 2020

Email Spam Classifier

A deep learning application that classifies email as spam or ham.

June 2020

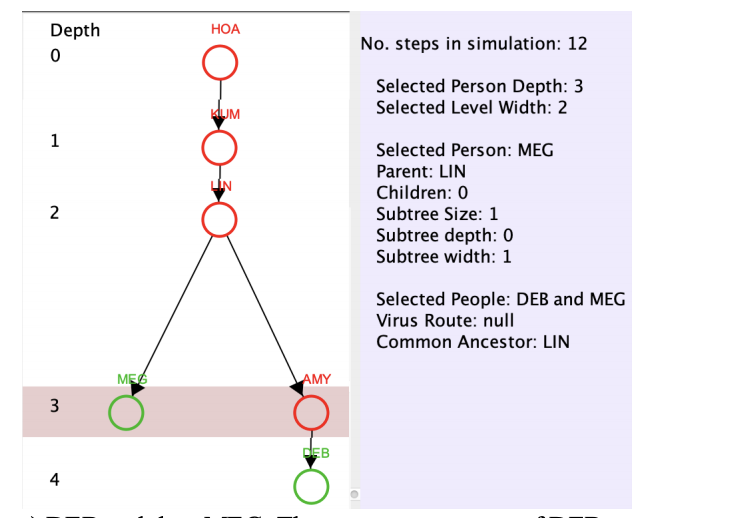

Modelling Viruses

A simulation of how an infectious virus spreads under variable parameters.

February 2020

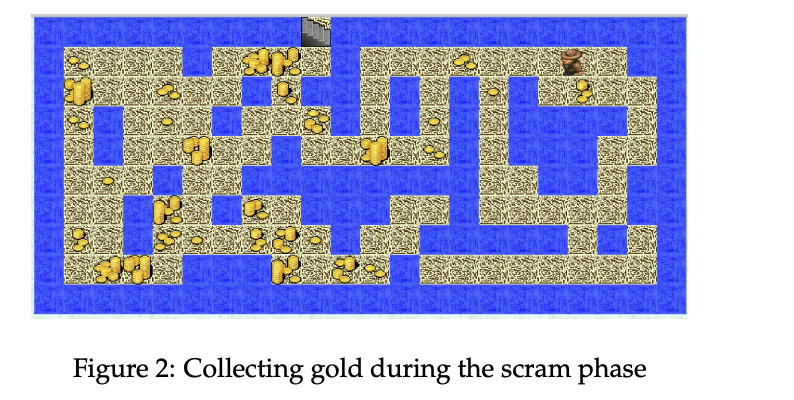

Cavern Under Gates

A game that calculates the shortest path that allows a player to scram from the start to the end of a maze.

April 2020

C1C0-Chatbot

A chatbot running on an AWS instance. Built for the Cornell Cup Robotics Project Team.

April 2020

I do

Python, SQL, Java, OCaml, HTML, CSS, PHP, JavaScript

and PyTorch, NumPy, Pandas, Scikit-learn, Matplotlib, Unittest

and Unix/Linux and Git

Certifications

Neural Networks and Deep Learning

This course provides an understanding of the major technology trends driving deep learning. Topics include (i) building, training, and applying fully connected deep neural networks; (ii) implementing efficient (vectorized) neural networks; and (iii) understanding the key parameters in a neural network's architecture.

Deep Learning Specialization

The specialization teaches the foundations of deep learning, how to build neural networks, and how to lead successful machine learning projects. Topics include convolutional networks, RNNs, LSTM, Adam, Dropout, BatchNorm, Xavier/He initialization, and case studies from healthcare, autonomous driving, sign language reading, music generation, and natural language processing. Taught in Python with TensorFlow.

Convolutional Neural Networks

This course teaches how to build convolutional neural networks and apply them to image data. Topics include (i) understanding how to build a convolutional neural network, including recent variations such as residual networks; (ii) knowing how to apply convolutional networks to visual detection and recognition tasks; (iii) knowing how to use neural style transfer to generate art; and (iv) being able to apply these algorithms to a variety of image, video, and other 2D or 3D data.

Sequence Models

This course teaches how to build models for natural language, audio, and other sequence data. Topics include (i) understanding how to build and train Recurrent Neural Networks (RNNs) and commonly used variants such as GRUs and LSTMs; (ii) being able to apply sequence models to natural language problems, including text synthesis; and (iii) being able to apply sequence models to audio applications, including speech recognition and music synthesis.

Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

This course teaches industry best practices for building deep learning applications. Topics include (i) being able to effectively use the common neural network "tricks", including initialization, L2 and dropout regularization, batch normalization, and gradient checking; (ii) being able to implement and apply a variety of optimization algorithms, such as mini-batch gradient descent, Momentum, RMSprop, and Adam, and check for their convergence; (iii) understanding new best practices for the deep learning era of how to set up train/dev/test sets and analyze bias/variance; and (iv) being able to implement a neural network in TensorFlow.

Structuring Machine Learning Projects

This course teaches how to build a successful machine learning project. Topics include (i) understanding how to diagnose errors in a machine learning system; (ii) being able to prioritize the most promising directions for reducing error; (iii) understanding complex ML settings, such as mismatched training/test sets, and comparing to and/or surpassing human-level performance; and (iv) knowing how to apply end-to-end learning, transfer learning, and multi-task learning.

Machine Learning

This course provides a broad introduction to machine learning, data mining, and statistical pattern recognition. Topics include (i) supervised learning (parametric/non-parametric algorithms, support vector machines, kernels, neural networks) and (ii) unsupervised learning (clustering, dimensionality reduction, recommender systems, deep learning).

About me

I am a rising junior studying Computer Science at Cornell University. I am interested in Machine Learning, NLP and Computer Vision. I am currently working as a Data Science intern at Microsoft. I have produced scalable multithreaded software for my internship with PLUGOUT and have developed accurate Natural Language Processing (NLP) solutions for my research with Cornell University.

Having previously worked with machine learning algorithms and big data, I am well versed in MySQL for databases and PyTorch for machine learning. I have achieved excellent results in the Image-Steganalysis-2 Kaggle machine learning competition, and I have completed several personal machine learning projects, such as a closed-domain question-answering system and a Covid-19 hospitalizations prediction project.